In this guide, we'll show you how to run custom code between two LLM calls.

This pipeline is a simple example of "function calling" or "tool use" on Substrate. For advanced situations, we can use the ComputeJSON node to generate a function call, and the If node to conditionally execute branches of a graph.

A JS code interpreter is coming soon. We're also designing the right abstractions for code execution in a stateful container. Let us know if you're interested!

Before we begin, we'll define a function that runs an image search on serper.dev. Later, we'll use RunPython to run this locally defined function in a remote Python sandbox.

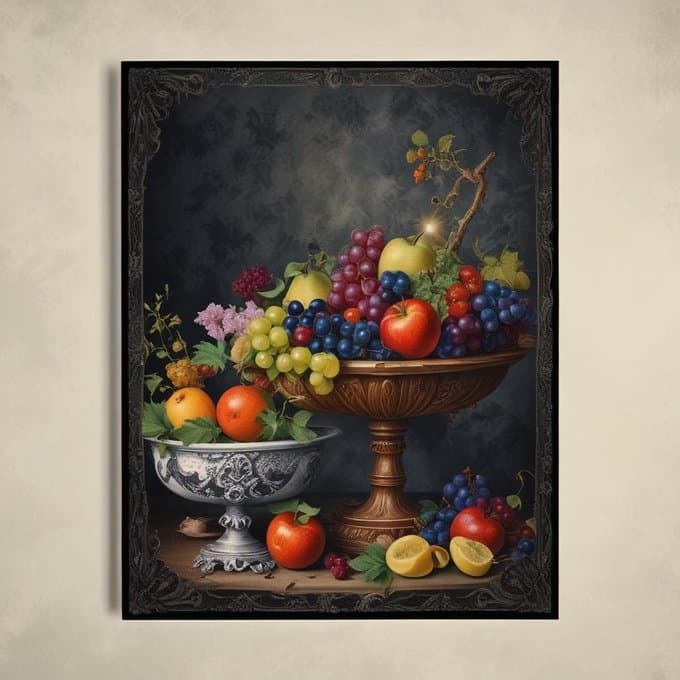
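For readers who cannot see the image, here is a minimal sketch of what such a search function might look like. The endpoint URL, the `X-API-KEY` header, and the `images` / `thumbnailUrl` / `imageUrl` response fields are assumptions about Serper's API, so check them against its documentation. The `post` parameter is a hypothetical addition that lets the function be exercised without network access.

```python
def image_search(query: str, api_key: str, post=None):
    """Return the URL of the first image result for `query`, or None."""
    if post is None:
        # Imported inside the function so the import happens wherever the
        # function actually runs (for example, a remote sandbox that has
        # the requests package installed).
        import requests

        post = requests.post

    response = post(
        "https://google.serper.dev/images",  # assumed endpoint
        headers={"X-API-KEY": api_key, "Content-Type": "application/json"},
        json={"q": query},
        timeout=30,
    )
    response.raise_for_status()

    # Assumed response shape: {"images": [{"thumbnailUrl": ..., "imageUrl": ...}]}
    for item in response.json().get("images", []):
        url = item.get("thumbnailUrl") or item.get("imageUrl")
        if url:
            return url
    return None
```

Keeping the import, URL, and parsing inside the function body means the function carries no dependencies on module-level names, which tends to matter when a function is shipped to another process to run.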

Now begins our Substrate pipeline. First, we ask an LLM to generate a unique image search query for a simple prompt, "bowl of fruit". The LLM comes up with interesting queries, like "vintage macabre still life fruit bowl".

Next, we call RunPython with the search function we defined above. We provide the query generated by the LLM along with API credentials, and we install the requests package in the container.

Our search function returns a small thumbnail image. In the final two steps of this pipeline, we upscale the image using UpscaleImage, and then "inpaint" it (filling in details following the prompt) using InpaintImage. Finally, we call substrate.run with the terminal node, inpaint.
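To see why passing only the terminal node is enough, it can help to picture the pipeline as a chain of dependencies. The toy model below is not the Substrate SDK: `Node` and `run` are hypothetical stand-ins, and the lambdas merely tag their input rather than calling any model. It only illustrates how running the last node can pull in every node upstream of it.

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Node:
    """Toy pipeline node (hypothetical; not a Substrate class)."""
    name: str
    fn: Callable[..., Any]
    inputs: List["Node"] = field(default_factory=list)


def run(terminal: Node, log: List[str]) -> Any:
    """Resolve upstream nodes depth-first, then run the terminal node."""
    cache: Dict[str, Any] = {}

    def resolve(node: Node) -> Any:
        if node.name not in cache:
            # Each input must be computed before the node that consumes it.
            args = [resolve(dep) for dep in node.inputs]
            log.append(node.name)
            cache[node.name] = node.fn(*args)
        return cache[node.name]

    return resolve(terminal)


# Placeholder steps mirroring the four stages described above.
query = Node("query", lambda: "vintage macabre still life fruit bowl")
search = Node("search", lambda q: f"thumbnail({q})", [query])
upscale = Node("upscale", lambda img: f"upscaled({img})", [search])
inpaint = Node("inpaint", lambda img: f"inpainted({img})", [upscale])

order: List[str] = []
result = run(inpaint, order)
```

After this runs, `order` records the sequence in which the nodes executed, and `result` holds the output of the final step with each earlier step nested inside it.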

The final result looks quite good, but has lost some details from the original thumbnail image. By switching to StableDiffusionXLInpaint and lowering the strength parameter, we can produce a result closer to the original image.

You can try running this example yourself by forking on Replit: